🩺 RadEval Debuts!

🩺 Revolutionizing Radiology Text Evaluation with AI-Powered Metrics

Imagine an evaluation framework that goes beyond surface-level text similarity and also assesses clinical accuracy and medical semantics in radiology reports. This vision is now a reality with RadEval, a groundbreaking, open-source evaluation toolkit designed specifically for AI-generated radiology text.

📊 All-in-one metrics for evaluating AI-generated radiology text

From traditional n-gram metrics to advanced LLM-based evaluations, RadEval provides 11+ evaluation metrics in one unified framework, so researchers can thoroughly assess their radiology text generation models with metrics that integrate domain-specific medical knowledge.
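To see why n-gram metrics alone are not enough for radiology text, here is a minimal, self-contained sketch of a unigram-overlap F1 score. It is an illustration written for this post, not one of RadEval's metrics, and the function names and example sentences are hypothetical. A hypothesis that drops the negation "no" reverses the clinical meaning yet still scores higher than a correct paraphrase.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_f1(reference, hypothesis, n=1):
    """F1 over n-gram overlap between two whitespace-tokenized strings."""
    ref = ngrams(reference.lower().split(), n)
    hyp = ngrams(hypothesis.lower().split(), n)
    overlap = sum((ref & hyp).values())  # clipped count of matching n-grams
    if overlap == 0:
        return 0.0
    precision = overlap / sum(hyp.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "no evidence of pleural effusion"
contradiction = "evidence of pleural effusion"  # opposite clinical meaning
paraphrase = "the pleural spaces are clear"     # same clinical meaning

print(f"contradiction: {ngram_f1(reference, contradiction):.2f}")
print(f"paraphrase:    {ngram_f1(reference, paraphrase):.2f}")
```

The lexical score rewards the contradictory report because it shares almost every word with the reference, which is the gap that semantic and clinical metrics are meant to close.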

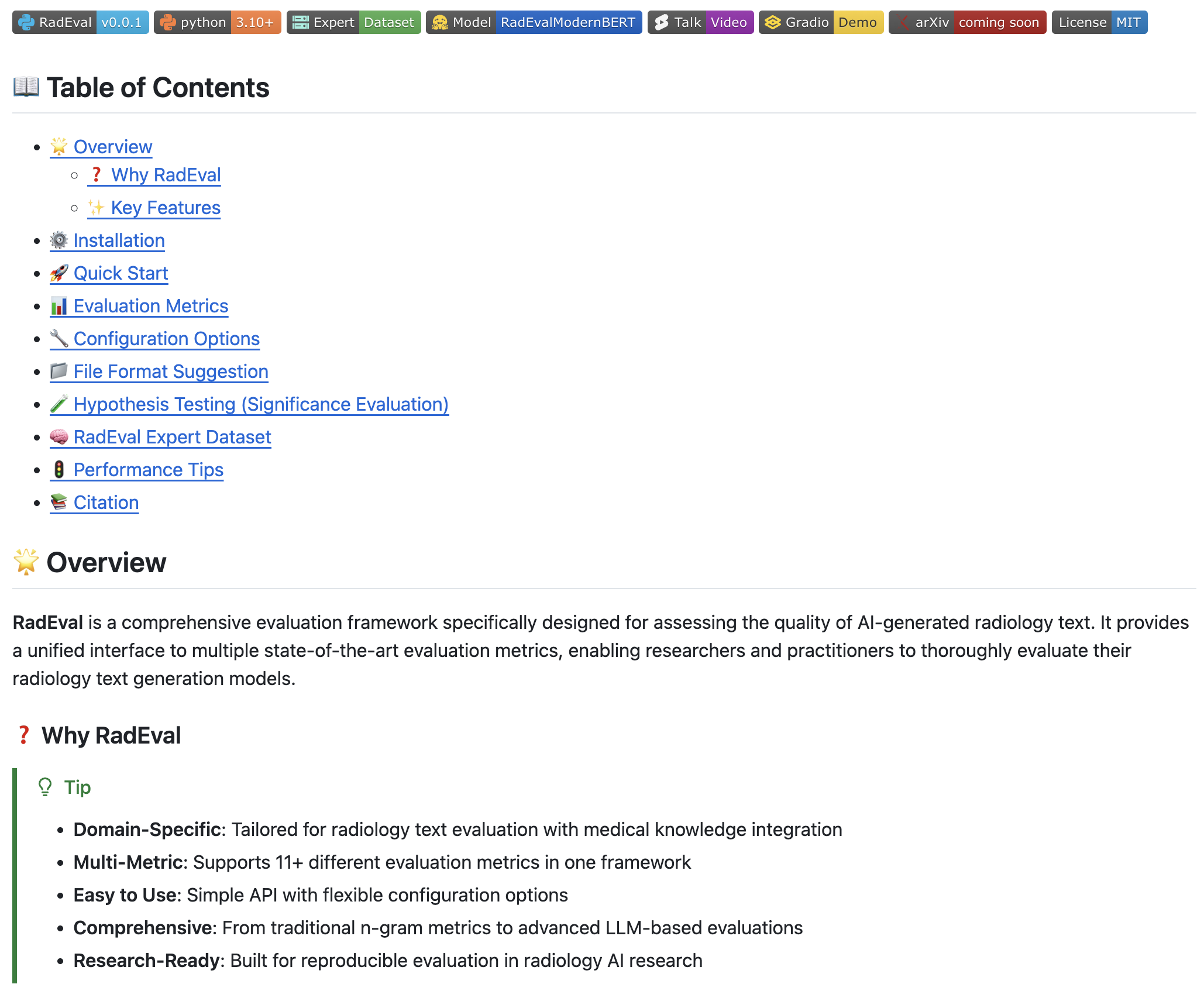

For a detailed handbook, please visit our GitHub repository.

🚀 Quick Start Demo

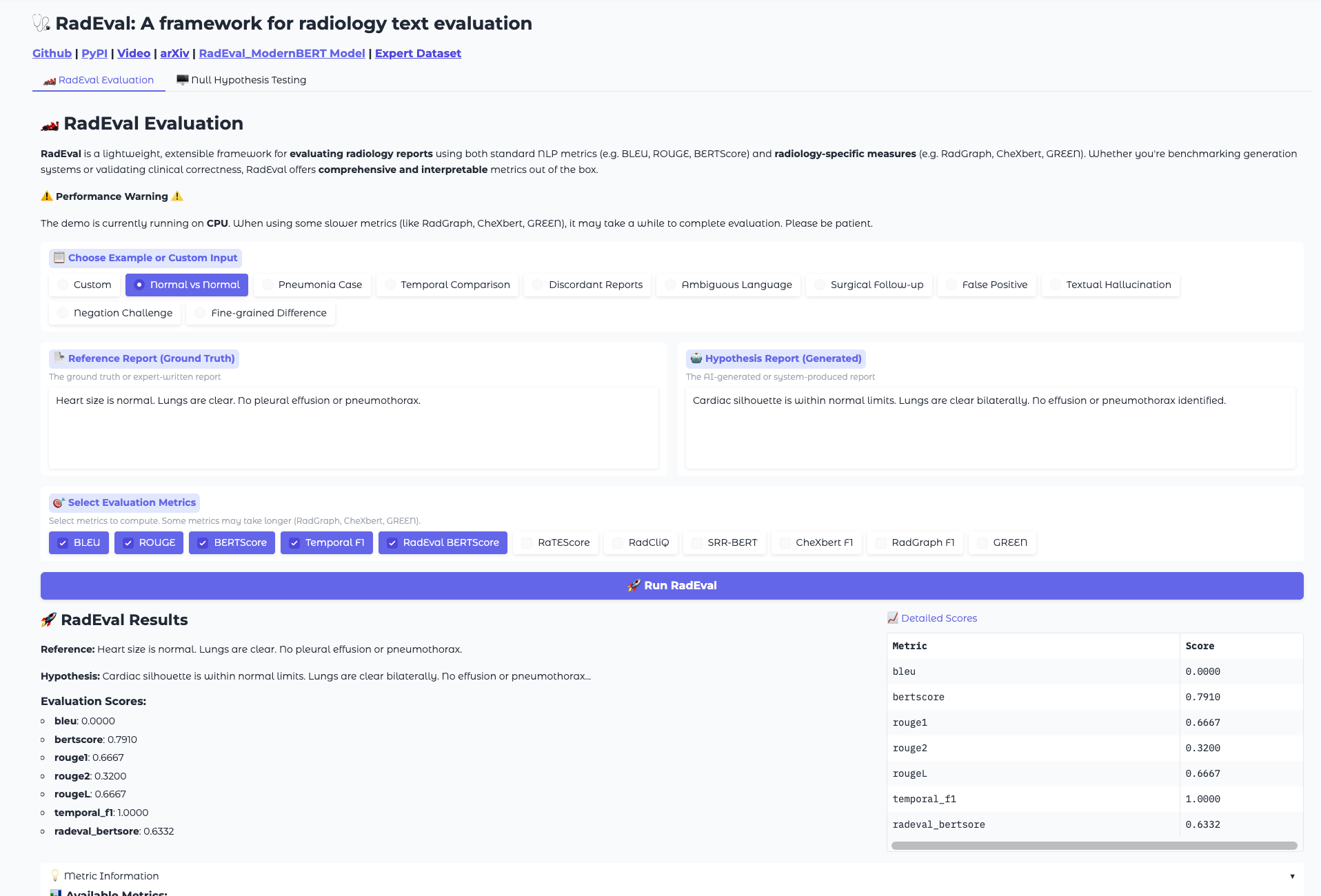

Try RadEval instantly with our interactive Gradio demo.

💡 Key Features

RadEval stands out with its comprehensive approach to radiology text evaluation:

- 🎯 Domain-Specific: Tailored for radiology with medical knowledge integration

- 📈 Multi-Metric: Supports lexical, semantic, clinical, and temporal evaluations

- ⚡ Easy to Use: Simple API with flexible configuration options

- 🔬 Research-Ready: Built-in statistical testing for system comparison

- 📦 PyPI Available: Install with a simple `pip install RadEval`
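Statistical testing for system comparison usually asks whether the gap between two systems' scores could be explained by chance. The sketch below shows one common choice, a paired randomization (sign-flip) test over per-report scores. This post does not say which test RadEval uses, so treat this as an assumption-laden illustration rather than RadEval's implementation; the function name, parameters, and scores are all hypothetical.

```python
import random


def paired_randomization_test(scores_a, scores_b, n_trials=10000, seed=0):
    """Two-sided p-value for the difference in mean score between two systems.

    scores_a and scores_b hold per-report metric values for the same reports.
    Each trial randomly swaps the two systems' scores on every report, which
    is equivalent to flipping the sign of that report's score difference.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both systems must be scored on the same reports")
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs))
    extreme = 0
    for _ in range(n_trials):
        shuffled = sum(d if rng.random() < 0.5 else -d for d in diffs)
        if abs(shuffled) >= observed:
            extreme += 1
    # Add-one smoothing keeps the estimate strictly above zero.
    return (extreme + 1) / (n_trials + 1)


# Hypothetical per-report scores for two systems on the same 20 reports.
system_a = [0.9] * 20
system_b = [0.1] * 20
print(paired_randomization_test(system_a, system_b))
```

A small p-value suggests the difference is unlikely to come from random variation across reports. Fixing the seed keeps the result reproducible between runs.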

🏥 Advancing the Radiology AI Research Community

We are committed to building a standardized and reproducible toolkit for researchers, clinicians, and developers dedicated to advancing AI evaluation in medical imaging and radiology. Together, we’re setting new standards for clinical AI assessment.

- PyPI Package — Install RadEval with pip

- HuggingFace Model — Access our domain-adapted evaluation model

- Interactive Demo — Try RadEval online

- Research Paper — Read our detailed research paper