CCD: Mitigating Hallucinations in Radiology MLLMs via Clinical Contrastive Decoding

Why CCD? 🔬

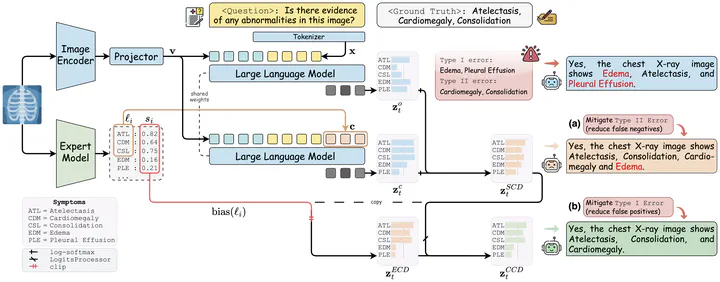

Radiology MLLMs remain vulnerable to prompt-induced hallucinations when clinical sections contain counterfactual details or ambiguous guidance. The figure above contrasts baseline predictions with CCD-enabled outputs across report generation and question answering tasks. Red highlights mark unsupported findings that can compromise patient care, while blue text reflects misleading prompt context the model must resist.

Clinical Contrastive Decoding (CCD) mitigates these risks at inference time. Rather than retraining models or relying on retrieval corpora, CCD injects trustworthy, image-grounded signals distilled from specialist expert models. The result is a decoding policy that maintains fluency yet stays faithful to the radiograph.

CCD at a Glance ⚙️

Clinical Contrastive Decoding (CCD) is a plug-and-play inference framework designed to reduce medical hallucinations in radiology MLLMs. It introduces structured clinical supervision from expert models (e.g., DenseNet or MedSigLIP) at decoding time, without modifying model weights or requiring external retrieval.

Given a chest radiograph, the expert model predicts symptom-level probabilities across 14 CheXpert categories. CCD integrates this signal through a dual-stage logit refinement strategy:

- Symptom-grounded Contrastive Decoding (SCD): constructs an anchor prompt from high-confidence findings (e.g., “Atelectasis, Cardiomegaly”) and computes a second set of logits conditioned on this prompt. The final logits are a weighted interpolation between the anchor-conditioned and original paths, which encourages the model to mention supported findings and so reduces false negatives.

- Expert-informed Contrastive Decoding (ECD): transforms expert probabilities into token-level logit biases via log-odds conversion. These biases are injected into the logits from the first stage, softly penalising unsupported findings and reducing false positives.
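The two stages above can be sketched on toy logits as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the threshold `tau`, the weights `alpha` and `beta`, the comma-joined prompt template, and the `finding_token_ids` mapping from findings to token ids are all hypothetical, and the exact interpolation and bias forms used by CCD may differ.

```python
import math
from typing import Dict, List


def build_anchor_prompt(probs: Dict[str, float], tau: float = 0.5) -> str:
    """Join findings whose expert probability is at least tau (assumed threshold)."""
    return ", ".join(name for name, p in probs.items() if p >= tau)


def scd_interpolate(orig: List[float], anchor: List[float], alpha: float = 0.5) -> List[float]:
    """Stage 1: weighted interpolation of original and anchor-conditioned logits."""
    return [(1.0 - alpha) * o + alpha * a for o, a in zip(orig, anchor)]


def log_odds(p: float, eps: float = 1e-6) -> float:
    """Convert a probability to log-odds, clamped away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


def ecd_bias(logits: List[float], probs: Dict[str, float],
             finding_token_ids: Dict[str, List[int]], beta: float = 1.0) -> List[float]:
    """Stage 2: add a log-odds bias to the tokens tied to each finding."""
    out = list(logits)
    for name, ids in finding_token_ids.items():
        bias = beta * log_odds(probs[name])
        for i in ids:
            out[i] += bias
    return out


# Toy vocabulary: [other, atelectasis, cardiomegaly, pneumothorax]
probs = {"Atelectasis": 0.9, "Cardiomegaly": 0.8, "Pneumothorax": 0.1}
token_ids = {"Atelectasis": [1], "Cardiomegaly": [2], "Pneumothorax": [3]}

anchor_prompt = build_anchor_prompt(probs)   # findings kept for the anchor prompt
orig_logits = [1.0, 0.0, 0.0, 2.0]           # path without the anchor prompt
anchor_logits = [1.0, 2.0, 2.0, 0.0]         # path conditioned on the anchor prompt

stage1 = scd_interpolate(orig_logits, anchor_logits, alpha=0.5)
final = ecd_bias(stage1, probs, token_ids, beta=1.0)
```

In this toy case the low-confidence finding ends up with the lowest logit and the two high-confidence findings with the highest, which is the qualitative behaviour the two stages are described as aiming for.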

This two-stage mechanism enables CCD to progressively guide generation with both symbolic supervision (via anchor prompts) and probabilistic constraints (via confidence scores), achieving robust improvements in both report generation and VQA. CCD is fully model-agnostic and integrates seamlessly with state-of-the-art radiology MLLMs such as MAIRA-2, Libra, LLaVA-Rad, and LLaVA-Med.
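Because the adjustment happens on logits at decoding time, it can be pictured as a hook inside an ordinary decoding loop. The sketch below is a rough illustration only: `step_logits` is a hypothetical stand-in for the MLLM forward pass, `refine` is a hypothetical stand-in for the two-stage adjustment, and the three-token vocabulary and bias values are invented for the example. None of these names come from the CCD codebase.

```python
from typing import Callable, List

Logits = List[float]


def greedy_decode(step_logits: Callable[[List[int]], Logits],
                  refine: Callable[[Logits], Logits],
                  eos_id: int,
                  max_new_tokens: int = 16) -> List[int]:
    """Greedy decoding where `refine` edits logits before each token is chosen."""
    tokens: List[int] = []
    for _ in range(max_new_tokens):
        logits = refine(step_logits(tokens))  # model weights are never touched
        next_id = max(range(len(logits)), key=logits.__getitem__)
        tokens.append(next_id)
        if next_id == eos_id:
            break
    return tokens


# Toy vocabulary: [eos, "effusion", "normal"]
def toy_model(tokens: List[int]) -> Logits:
    # Prefers the (unsupported) "effusion" token first, then end-of-sequence.
    return [0.0, 2.0, 1.0] if not tokens else [3.0, 0.0, 0.0]


def toy_refine(logits: Logits) -> Logits:
    # Hypothetical expert-derived bias that penalises token 1.
    bias = [0.0, -2.0, 0.0]
    return [z + b for z, b in zip(logits, bias)]


baseline = greedy_decode(toy_model, lambda z: z, eos_id=0)  # no adjustment
guided = greedy_decode(toy_model, toy_refine, eos_id=0)     # with adjustment
```

The same loop serves both runs; only the `refine` callable changes, which is the sense in which an inference-time method can be attached to different models without retraining.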

Unlike prior contrastive decoding approaches that rely on perturbed visual or textual inputs, CCD leverages clinically grounded signals from expert models to provide task-specific and symptom-level control during generation.

Key Contributions ✨

Empirical Insight: We conduct a systematic analysis of prompt-induced hallucinations in radiology MLLMs, revealing that noisy clinical sections (such as irrelevant or contradictory clinical details) can trigger unsupported findings across multiple datasets.

Inference-time Framework: We introduce Clinical Contrastive Decoding (CCD) — a dual-stage inference-time strategy that leverages expert-derived labels as anchor prompts and performs probabilistic logit adjustments, requiring no retraining or architectural modifications.

Consistent Gains: Extensive experiments on MIMIC-CXR, IU-Xray, and CheXpert Plus demonstrate that CCD achieves up to +17% improvement in RadGraph-F1 with SOTA radiology MLLMs (MAIRA-2), along with higher VQA accuracy, all without altering model weights or structures.

Evaluation Highlights 📈

- Achieves up to +17% RadGraph-F1 on MIMIC-CXR when attached to state-of-the-art report generators.

- Reduces mention-level hallucinations on IU-Xray while maintaining BLEU / ROUGE scores, confirming that CCD improves factuality without harming surface-level metrics.

Ablation Studies 🧪

Ablations show that both contrastive stages matter: removing either the anchor-prompt stage (SCD) or the expert logit-bias stage (ECD) degrades factual metrics by ~5–8%.

My Musings ⏳

As I reflect on the development and impact of Clinical Contrastive Decoding (CCD), one quote comes to mind:

It’s better to be roughly right than precisely wrong.

— Carveth Read, Logic: Deductive and Inductive

This quote perfectly captures the spirit of our approach. In the high-stakes field of radiology, the cost of hallucinations can be severe. By integrating expert-derived signals at inference time, CCD prioritises clinical accuracy over mere linguistic fluency. It’s a reminder that in medical AI, being approximately correct and grounded in reality is far more valuable than generating fluent but misleading text.

BibTeX 📚

@article{zhang2025ccd,
  title={CCD: Mitigating Hallucinations in Radiology MLLMs via Clinical Contrastive Decoding},
  author={Zhang, Xi and Meng, Zaiqiao and Lever, Jake and Ho, Edmond SL},
  journal={arXiv preprint arXiv:2509.23379},
  year={2025}
}