Libra: Leveraging Temporal Images for Biomedical Radiology Analysis

Why Libra?

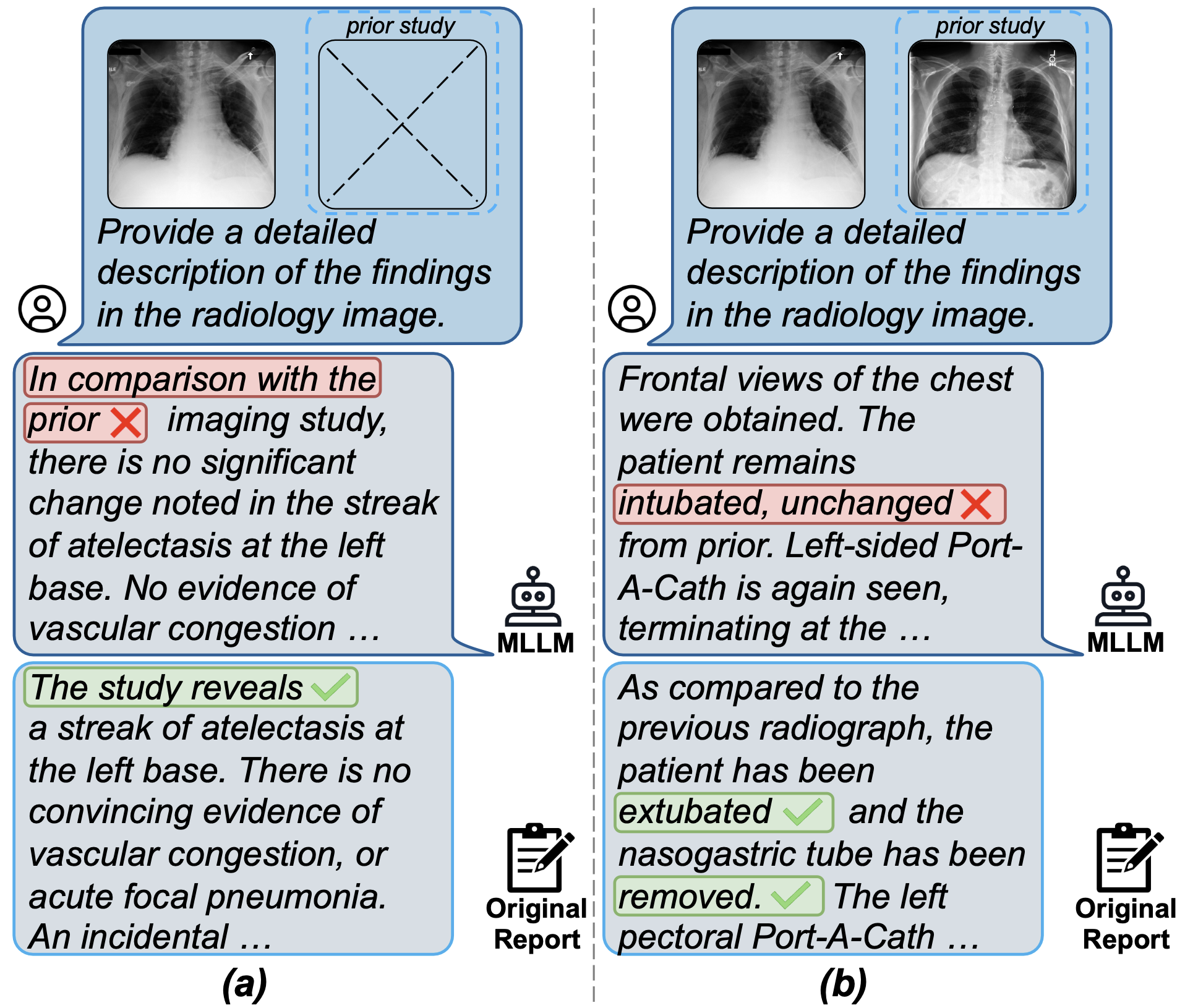

Temporal hallucination is a critical challenge in radiology report generation (RRG). Traditional multimodal large language models (MLLMs) struggle to integrate prior images correctly, often generating:

- Spurious references to nonexistent prior studies (Single-image case).

- Inaccurate interpretations of disease progression (Temporal-image case).

Libra addresses these limitations by integrating a Temporal Alignment Connector (TAC) to improve temporal awareness, ensuring:

- Prior studies are correctly referenced only when available.

- Hallucinated references are eliminated, avoiding misleading reports.

- Temporal changes are accurately captured, ensuring clinically meaningful outputs.
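The single-image failure mode above can be made concrete with a small check. The following is a minimal sketch, not part of Libra: it flags a generated report that mentions an earlier study when no prior image was supplied. The cue phrases and the function names are illustrative assumptions, and a keyword match like this is only a rough proxy for temporal hallucination.

```python
import re
from typing import List

# Hypothetical cue phrases that usually imply comparison with an earlier study.
# This list is an illustrative assumption, not taken from the Libra paper.
PRIOR_CUES = [
    r"\bcompared (?:to|with)\b",
    r"\bprior (?:study|exam|examination|radiograph|film)\b",
    r"\bprevious(?:ly)?\b",
    r"\binterval\b",
    r"\bunchanged\b",
    r"\b(?:has|have) (?:improved|worsened|increased|decreased|resolved)\b",
]
_CUE_RE = re.compile("|".join(PRIOR_CUES), flags=re.IGNORECASE)


def find_prior_references(report: str) -> List[str]:
    """Return every cue phrase in the report that points at an earlier study."""
    return [match.group(0).lower() for match in _CUE_RE.finditer(report)]


def has_temporal_hallucination(report: str, prior_available: bool) -> bool:
    """Flag a report that cites a prior study when none was supplied."""
    return (not prior_available) and bool(find_prior_references(report))
```

For example, `has_temporal_hallucination("Compared to the prior study, the effusion has increased.", prior_available=False)` returns `True`, while the same report with `prior_available=True` is not flagged.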

Overview

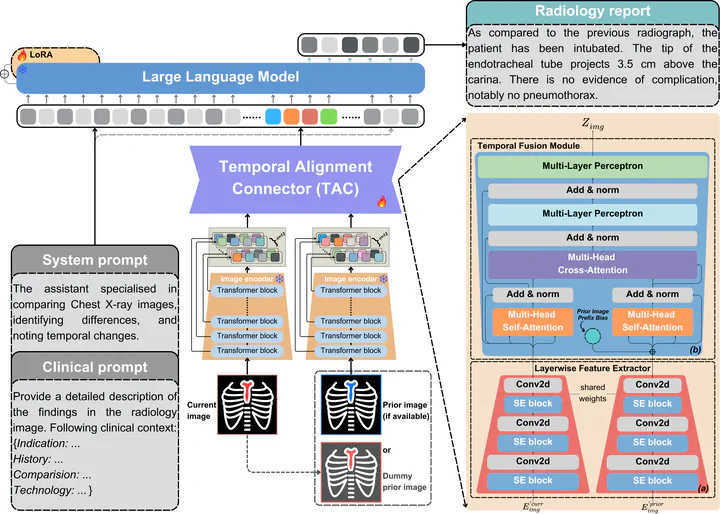

We propose Libra (Leveraging Temporal Images for Biomedical Radiology Analysis), a novel framework tailored for radiology report generation (RRG) that captures the temporal changes between a current image and its prior study and uses them when generating the report.

Libra leverages RAD-DINO, a pre-trained vision transformer, as its image encoder to generate robust and scalable image features. These features are further refined by a Temporal Alignment Connector (TAC), a key innovation in Libra’s architecture. The TAC comprises:

- Layerwise Feature Extractor (LFE): Captures high-granularity image feature embeddings from the encoder.

- Temporal Fusion Module (TFM): Integrates temporal references from prior studies to enhance temporal awareness and reasoning.

These refined features are fed into Meditron, a specialised medical large language model (LLM), to generate comprehensive, temporally aware radiology reports. Libra’s modular design combines state-of-the-art open-source pre-trained models for image and text, aligning them through a temporally aware adapter (the TAC) to support robust cross-modal reasoning and understanding.
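The data flow through the TAC can be sketched in a few lines. This is a toy illustration under stated assumptions, not Libra's implementation: the text above does not specify the internals of the LFE or TFM, so the sketch assumes the LFE averages the encoder's per-layer token features and the TFM applies single-head cross-attention (current-image tokens as queries, prior-image tokens as keys and values) with a residual connection. All function names are hypothetical.

```python
import math
from typing import List, Optional

Matrix = List[List[float]]  # rows are patch tokens, columns are feature dims


def layerwise_feature_extractor(hidden_states: List[Matrix]) -> Matrix:
    """Fuse per-layer encoder outputs into one token matrix (assumed: plain mean)."""
    n_layers = len(hidden_states)
    n_tokens, dim = len(hidden_states[0]), len(hidden_states[0][0])
    return [
        [sum(layer[t][d] for layer in hidden_states) / n_layers for d in range(dim)]
        for t in range(n_tokens)
    ]


def _softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_fusion(current: Matrix, prior: Optional[Matrix]) -> Matrix:
    """Let current-image tokens attend to prior-image tokens, plus a residual.

    Assumed behaviour: with no prior study, the current features pass through
    unchanged, so no prior-related signal reaches the language model.
    """
    if prior is None:
        return [row[:] for row in current]
    scale = math.sqrt(len(current[0]))
    fused = []
    for q in current:
        weights = _softmax([sum(a * b for a, b in zip(q, k)) / scale for k in prior])
        context = [sum(w * k[d] for w, k in zip(weights, prior)) for d in range(len(q))]
        fused.append([qi + ci for qi, ci in zip(q, context)])
    return fused


def temporal_alignment_connector(
    cur_layers: List[Matrix], prior_layers: Optional[List[Matrix]] = None
) -> Matrix:
    """Apply the LFE to each image, then the TFM across the pair."""
    current = layerwise_feature_extractor(cur_layers)
    prior = layerwise_feature_extractor(prior_layers) if prior_layers else None
    return temporal_fusion(current, prior)
```

In this sketch the output of `temporal_alignment_connector` stands in for the refined features that would be passed to the LLM. Calling it with only `cur_layers` models the single-image case.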

Trained with a two-stage strategy, Libra demonstrates the potential of multimodal large language models (MLLMs) in specialised radiology applications. In extensive experiments on the MIMIC-CXR dataset, Libra sets a new state of the art among MLLMs of the same parameter scale.

Key Contributions

- Temporal Awareness: Libra captures and synthesizes temporal changes in medical images, addressing the challenge of handling references to prior studies in RRG tasks.

- Innovative Architecture: The Temporal Alignment Connector (TAC) combines high-granularity feature extraction with temporal integration, significantly enhancing cross-modal reasoning capabilities.

- State-of-the-Art Performance: Libra achieves outstanding results on the MIMIC-CXR dataset, outperforming existing MLLMs of similar scale in both accuracy and temporal reasoning.

- Libra Repository: Our repository provides a public, detailed implementation of Libra that covers both training and evaluation, supporting reproducibility and further research on radiology report generation.

Experimental Results

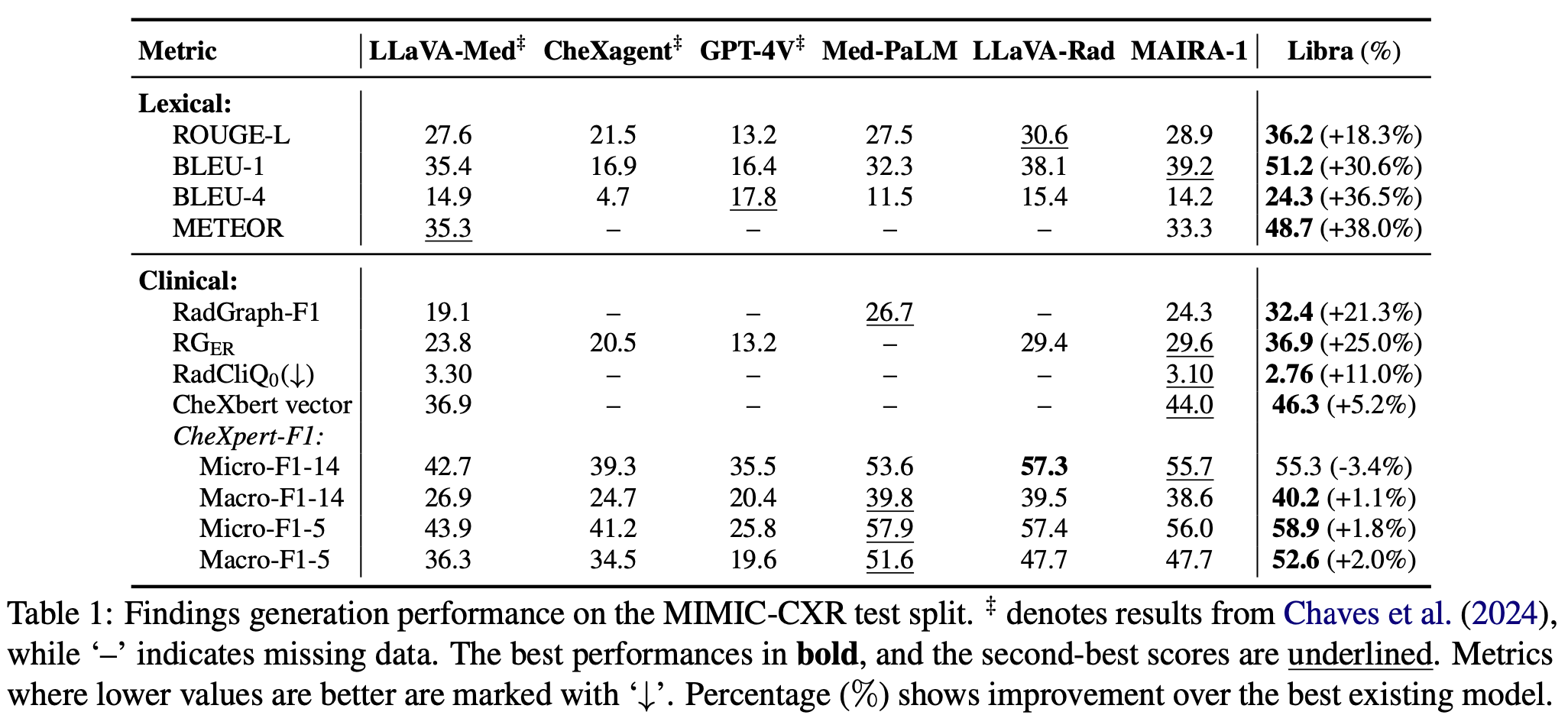

Libra was designed for radiology report generation (RRG). Its results on MIMIC-CXR break down as follows:

- Lexical and Clinical Metrics: Libra delivers competitive results across traditional metrics like ROUGE-L, BLEU, METEOR, and RadGraph-based scores.

- Radiologist-Aligned Metrics: It leads in the RadCliQ metric and CheXbert vector similarity, showcasing its alignment with clinical expectations.

- CheXpert Classification: Libra achieves the highest Macro-F1 scores and remains competitive in Micro-F1, further solidifying its reliability in clinical classification tasks.

By leveraging its Temporal Alignment Connector (TAC), Libra effectively captures temporal contexts, generating radiology reports that are both accurate and clinically meaningful. While there are minor gaps in select clinical metrics, Libra’s robust performance demonstrates its potential to set new standards in RRG.
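The difference between the Macro-F1 and Micro-F1 scores mentioned above can be shown with a short worked example. This is a generic sketch of the two averaging schemes, not the evaluation code used for Libra. The label names and counts below are made up for illustration.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int, int]  # (true positives, false positives, false negatives)


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 = 2*TP / (2*TP + FP + FN); defined as 0.0 when nothing is predicted or present."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(per_label: Dict[str, Counts]) -> float:
    """Average the per-label F1 scores, so every label counts equally."""
    return sum(f1(*counts) for counts in per_label.values()) / len(per_label)


def micro_f1(per_label: Dict[str, Counts]) -> float:
    """Pool the counts over all labels first, so frequent labels dominate."""
    tp = sum(counts[0] for counts in per_label.values())
    fp = sum(counts[1] for counts in per_label.values())
    fn = sum(counts[2] for counts in per_label.values())
    return f1(tp, fp, fn)


# Hypothetical counts: one common finding and one rare finding.
example = {
    "Pleural Effusion": (90, 10, 10),  # F1 = 0.9
    "Pneumothorax": (1, 1, 1),         # F1 = 0.5
}
```

Here `macro_f1(example)` is 0.7, while `micro_f1(example)` is about 0.89. The rare label pulls the macro average down much more than the micro average, which is why a high Macro-F1 indicates good performance on infrequent findings as well as common ones.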

Performance Analysis

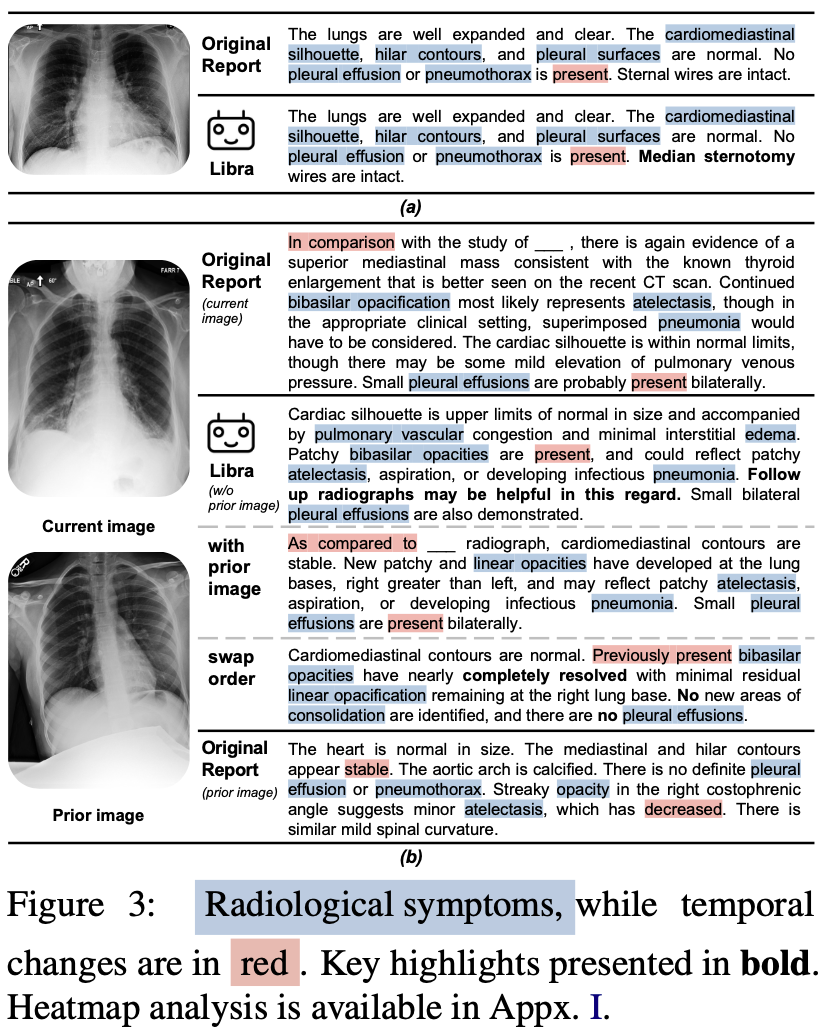

Cases Without Prior Images

In scenarios where no prior image is available, Libra shines by delivering detailed and clinically relevant descriptions without introducing spurious references. For instance, as shown in Figure 3 (a), Libra identified “sternal wires” and their type, going beyond the ground truth. This demonstrates its ability to provide meaningful insights while avoiding errors caused by nonexistent prior studies.

Cases With Prior Images

When prior images are available, Libra takes its analysis to the next level. In Figure 3 (b), new abnormalities such as pleural effusion and pneumonia were identified in the current image. When the prior image was withheld, Libra described the findings accurately without inferring disease progression, maintaining clinical accuracy. When the prior image was included, Libra captured the progressive changes, provided detailed descriptions, and explicitly referenced the comparison. This capability ensures a clear understanding of temporal changes and enhances the accuracy of disease progression descriptions.

Evaluating Temporal Consistency

To test Libra’s temporal reasoning, we swapped the image order, treating the prior image as the current image and vice versa. The generated report reflected an improved patient condition, aligning with the reversed input sequence but contradicting the original ground truth. Interestingly, the report closely resembled the original description of the prior image, as shown at the bottom of Figure 3 (b). This highlights Libra’s ability to adapt to different temporal contexts, generating accurate and contextually consistent reports that align with standard clinical practices.
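The order-swap test described above can be expressed as a small probe. This is a sketch only: `generate` is a hypothetical interface (any callable that maps a current image and an optional prior image to a report string), and `toy_generate` is a stand-in with fake image identifiers, not a real model.

```python
from typing import Callable, Dict, Optional

# Hypothetical interface: (current_image, prior_image_or_None) -> report text.
ReportFn = Callable[[str, Optional[str]], str]


def swap_order_probe(generate: ReportFn, current: str, prior: str) -> Dict[str, str]:
    """Run the model on the original order, the reversed order, and without a prior."""
    return {
        "original": generate(current, prior),
        "swapped": generate(prior, current),
        "current_only": generate(current, None),
    }


def toy_generate(current: str, prior: Optional[str]) -> str:
    """Stand-in model that compares effusion sizes encoded in fake image ids."""
    size = {"img_small_effusion": 1, "img_large_effusion": 2}
    if prior is None:
        return "Pleural effusion is present."
    if size[current] > size[prior]:
        return "Pleural effusion has increased compared to the prior study."
    return "Pleural effusion has decreased compared to the prior study."


reports = swap_order_probe(toy_generate, "img_large_effusion", "img_small_effusion")
```

A temporally consistent model should describe worsening in `reports["original"]`, improvement in `reports["swapped"]`, and make no comparison at all in `reports["current_only"]`.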

Libra’s performance in these cases underscores its potential to revolutionize radiology report generation by seamlessly integrating temporal reasoning into its analysis.

BibTeX

@inproceedings{zhang-etal-2025-libra,
    title = "Libra: Leveraging Temporal Images for Biomedical Radiology Analysis",
    author = "Zhang, Xi and
      Meng, Zaiqiao and
      Lever, Jake and
      Ho, Edmond S. L.",
    editor = "Che, Wanxiang and
      Nabende, Joyce and
      Shutova, Ekaterina and
      Pilehvar, Mohammad Taher",
    booktitle = "Findings of the Association for Computational Linguistics: ACL 2025",
    month = jul,
    year = "2025",
    address = "Vienna, Austria",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2025.findings-acl.888/",
    pages = "17275--17303",
    ISBN = "979-8-89176-256-5",
    abstract = "Radiology report generation (RRG) requires advanced medical image analysis, effective temporal reasoning, and accurate text generation. While multimodal large language models (MLLMs) align with pre-trained vision encoders to enhance visual-language understanding, most existing methods rely on single-image analysis or rule-based heuristics to process multiple images, failing to fully leverage temporal information in multi-modal medical datasets. In this paper, we introduce **Libra**, a temporal-aware MLLM tailored for chest X-ray report generation. Libra combines a radiology-specific image encoder with a novel Temporal Alignment Connector (**TAC**), designed to accurately capture and integrate temporal differences between paired current and prior images. Extensive experiments on the MIMIC-CXR dataset demonstrate that Libra establishes a new state-of-the-art benchmark among similarly scaled MLLMs, setting new standards in both clinical relevance and lexical accuracy. All source code and data are publicly available at: https://github.com/X-iZhang/Libra."
}
